LaminDB is an open-source data framework for biology to query, trace, and validate datasets and models at scale. With one API, you get: lakehouse, lineage, feature store, ontologies, bio-registries & formats.

Why?

Reproducing results and understanding how a dataset or model was created is now more important than ever, but was a struggle even before the age of agents. Training models across thousands of datasets — from LIMS and ELNs to orthogonal assays and cross-team silos — is now a big learning opportunity, but has historically been impossible. While code has git and tables have data warehouses, biological data has lacked a dedicated, API-first management framework to ensure quality and queryability.

LaminDB fills the gap with a lineage-native data lakehouse that understands bio-registries and formats (AnnData, .zarr, …) to enable scaled learning operations.

It provides queries across many datasets with enough freedom to maintain high-paced R&D while automating rich context on top of versioning, change management, and other industry standards.

Highlights:

- lineage → track inputs & outputs of notebooks, scripts, functions & pipelines with a single line of code

- lakehouse → manage, monitor & validate schemas; query across many datasets

- feature store → manage features & labels; leverage batch loading

- FAIR datasets → validate & annotate `DataFrame`, `AnnData`, `SpatialData`, `parquet`, `zarr`, …

- LIMS & ELN → manage experimental metadata, ontologies & markdown notes

- unified access → single API for storage locations (local, S3, GCP, …), SQL databases (Postgres, SQLite) & ontologies

- reproducible → auto-track source code & compute environments with data, code & report versioning

- zero lock-in & scalable → runs in your infrastructure; not a client for a rate-limited REST API

- simple → just `pip install` a Python package, no complicated setup

- integrations → vitessce, nextflow, redun, and more

- extensible → create custom plug-ins based on the Django ORM

If you want a GUI: LaminHub is a data collaboration hub built on LaminDB similar to how GitHub is built on git.

Who uses it?

Scientists & engineers in pharma, biotech, and academia, including:

- Pfizer – A global BigPharma company with headquarters in the US

- Ensocell Therapeutics – A BioTech with offices in Cambridge, UK, and California

- DZNE – The National Research Center for Neuro-Degenerative Diseases in Germany

- Helmholtz Munich – The National Research Center for Environmental Health in Germany

- scverse – An international non-profit for open-source omics data tools

- The Global Immunological Swarm Learning Network – Research hospitals at U Bonn, Harvard, MIT, Stanford, ETH Zürich, Charite, Mount Sinai, and others

Copy summary.md into an LLM chat and let AI explain or read the docs.

Install the Python package:

pip install lamindb

You can browse public databases at lamin.ai/explore. To query laminlabs/cellxgene, run:

import lamindb as ln

db = ln.DB("laminlabs/cellxgene") # a database object for queries

df = db.Artifact.to_dataframe() # a dataframe listing datasets & models

To get a specific dataset, run:

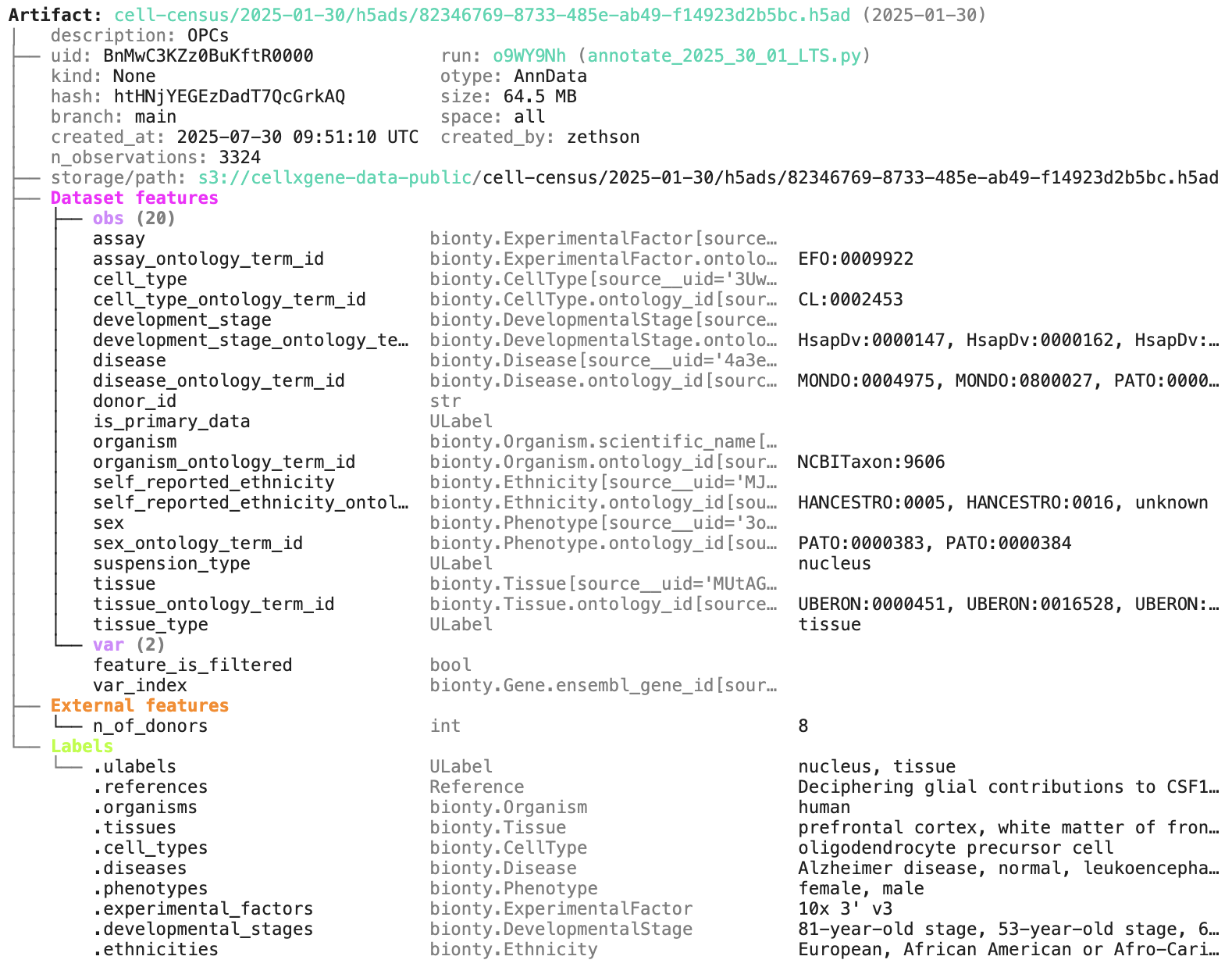

artifact = db.Artifact.get("BnMwC3KZz0BuKftR") # a metadata object for a dataset

artifact.describe() # describe the context of the dataset

Access the content of the dataset via:

local_path = artifact.cache() # return a local path from a cache

adata = artifact.load() # load object into memory

accessor = artifact.open() # return a streaming accessor

You can query 14 built-in registries (Artifact, Storage, Feature, Record, etc.) and additional registries via plug-ins. For example, bionty adds 13 registries for biological entities (Disease, CellType, Tissue, etc.) that map >20 public ontologies:

diseases = db.bionty.Disease.lookup() # a lookup object to auto-complete diseases

df = db.Artifact.filter(diseases=diseases.alzheimer_disease).to_dataframe() # filter by fields

You can create a LaminDB instance at lamin.ai and invite collaborators. To connect to a remote instance, run:

lamin login

lamin connect account/name

If you prefer to work with a local SQLite database (no login required), run this instead:

lamin init --storage ./quickstart-data --modules bionty

On the terminal and in a Python session, LaminDB will now auto-connect.

To save a file or folder from the command line, run:

lamin save myfile.txt --key examples/myfile.txt

To sync a file into a local cache (artifacts) or development directory (transforms), run:

lamin load --key examples/myfile.txt

Read more: docs.lamin.ai/cli.

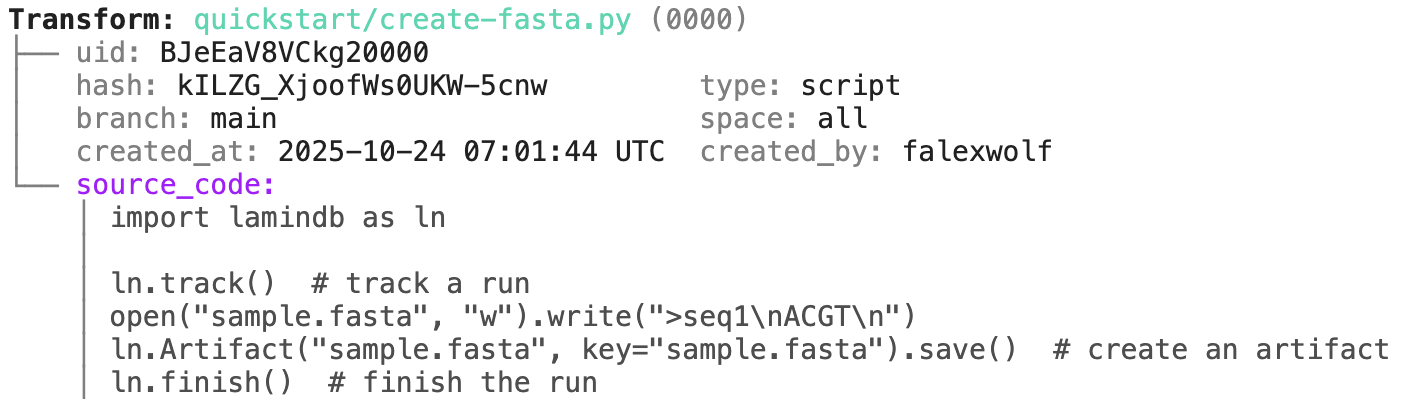

To create a dataset while tracking source code, inputs, outputs, logs, and environment:

import lamindb as ln

# → connected lamindb: account/instance

ln.track() # track code execution

open("sample.fasta", "w").write(">seq1\nACGT\n") # create dataset

ln.Artifact("sample.fasta", key="sample.fasta").save() # save dataset

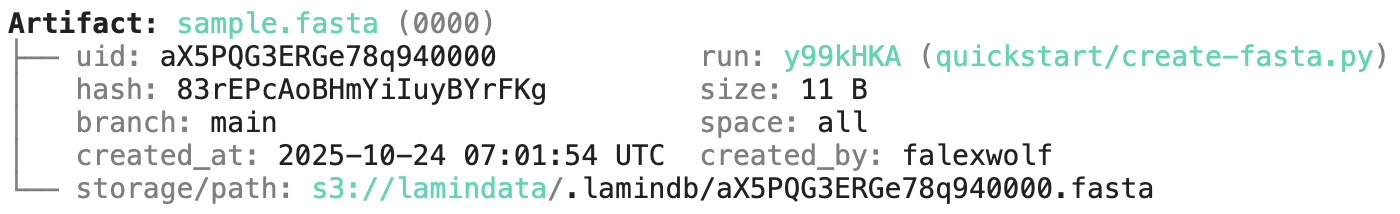

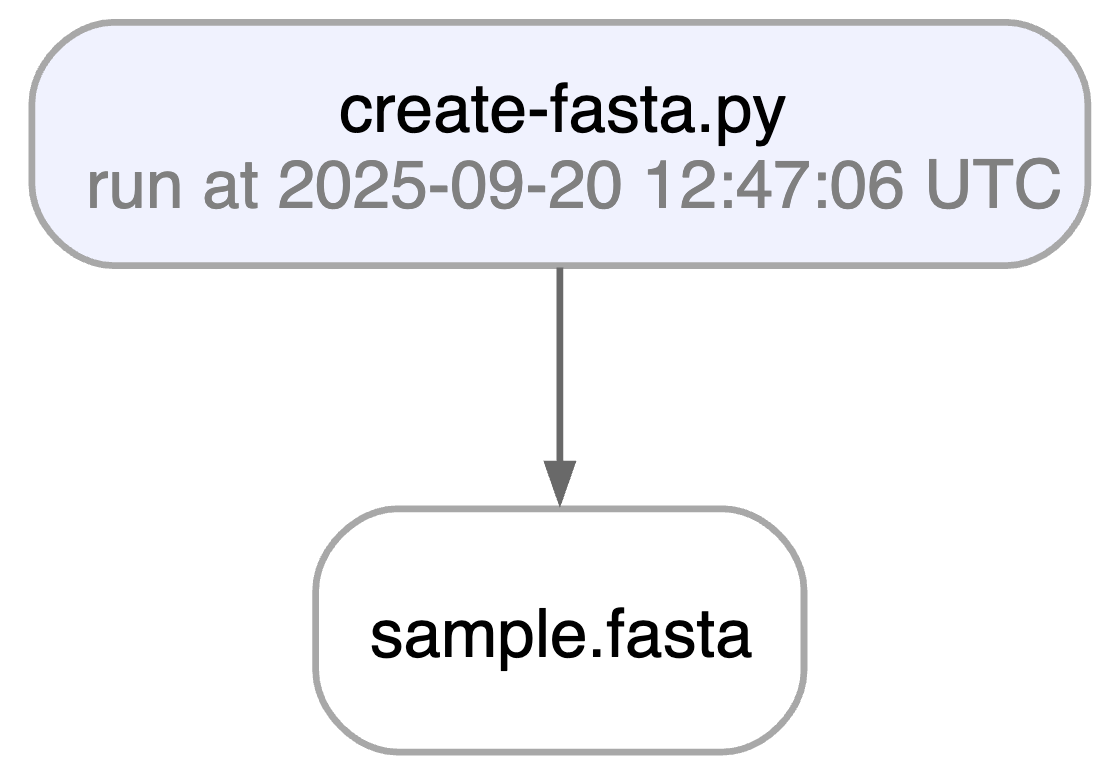

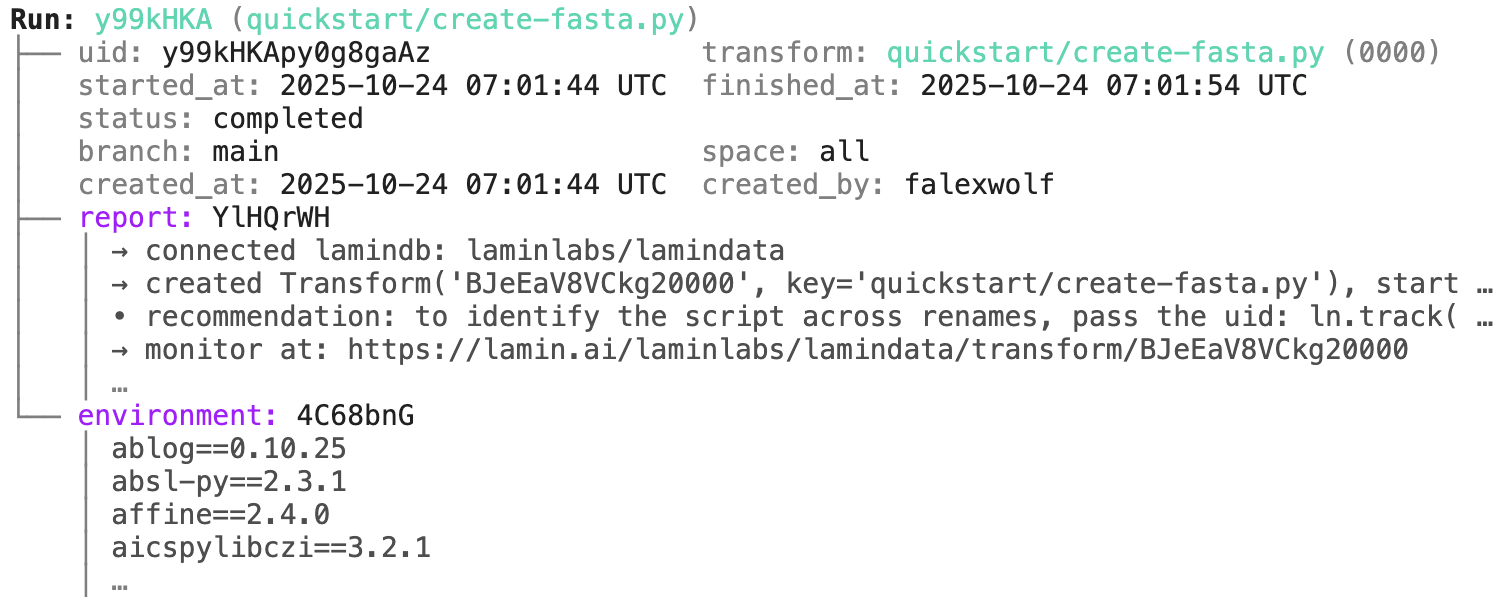

ln.finish() # mark run as finished

Running this snippet as a script (python create-fasta.py) produces the following data lineage:

artifact = ln.Artifact.get(key="sample.fasta") # get artifact by key

artifact.describe() # general context of the artifact

artifact.view_lineage() # fine-grained lineage

Here is how to access the generating run and transform objects programmatically:

run = artifact.run # get the run object

transform = artifact.transform # get the transform object

You can label an artifact by running:

my_label = ln.ULabel(name="My label").save() # a universal label

project = ln.Project(name="My project").save() # a project label

artifact.ulabels.add(my_label)

artifact.projects.add(project)

Query for it:

ln.Artifact.filter(ulabels=my_label, projects=project).to_dataframe()

You can also query by the metadata that lamindb automatically collects:

ln.Artifact.filter(run=run).to_dataframe() # query artifacts created by a run

ln.Artifact.filter(transform=transform).to_dataframe() # query artifacts created by a transform

ln.Artifact.filter(size__gt=1e6).to_dataframe() # query artifacts bigger than 1 MB

To include more information in the resulting dataframe, pass `include`.

ln.Artifact.to_dataframe(include=["created_by__name", "storage__root"]) # include fields from related registries

Note: The query syntax for DB objects and for your default database is the same.
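The keyword arguments passed to `filter()` follow the Django ORM convention of `field__lookup`, as in `size__gt=1e6`. As a minimal plain-Python sketch of how such an expression can be interpreted, assuming records are simple dictionaries and using a hypothetical helper `matches` that is not part of LaminDB:

```python
import operator

# Supported lookup suffixes mapped to comparison functions (illustrative subset).
LOOKUPS = {
    "exact": operator.eq,
    "gt": operator.gt,
    "gte": operator.ge,
    "lt": operator.lt,
    "lte": operator.le,
    "startswith": lambda value, prefix: str(value).startswith(prefix),
}


def matches(record: dict, **conditions) -> bool:
    """Return True if the record satisfies every `field__lookup=value` condition."""
    for expression, expected in conditions.items():
        field, _, lookup = expression.partition("__")
        compare = LOOKUPS[lookup or "exact"]  # a bare field name means equality
        if not compare(record[field], expected):
            return False
    return True


# Hypothetical artifact metadata, for illustration only.
artifacts = [
    {"key": "examples/myfile.txt", "size": 120},
    {"key": "my_datasets/scrna.h5ad", "size": 5_000_000},
]

large = [a["key"] for a in artifacts if matches(a, size__gt=1e6)]
print(large)  # ['my_datasets/scrna.h5ad']
```

The sketch only handles lookups on a record's own fields. Expressions such as `created_by__name` additionally traverse relationships between registries, which the real ORM resolves through SQL joins.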

You can annotate datasets and samples with features. Let's define some:

from datetime import date

ln.Feature(name="gc_content", dtype=float).save()

ln.Feature(name="experiment_note", dtype=str).save()

ln.Feature(name="experiment_date", dtype=date, coerce=True).save() # accept date strings

During annotation, feature names and data types are validated against these definitions:

artifact.features.add_values({

"gc_content": 0.55,

"experiment_note": "Looks great",

"experiment_date": "2025-10-24",

})

Query for it:

ln.Artifact.filter(experiment_date="2025-10-24").to_dataframe() # query all artifacts annotated with this `experiment_date`

To include the feature values in the dataframe, pass `include`.

ln.Artifact.to_dataframe(include="features") # include the feature annotations

You can create records for the entities underlying your experiments: samples, perturbations, instruments, etc., for example:

sample = ln.Record(name="Sample", is_type=True).save() # create entity type: Sample

ln.Record(name="P53mutant1", type=sample).save() # sample 1

ln.Record(name="P53mutant2", type=sample).save() # sample 2

Define features and annotate an artifact with a sample:

ln.Feature(name="design_sample", dtype=sample).save()

artifact.features.add_values({"design_sample": "P53mutant1"})

You can query & search the Record registry in the same way as Artifact or Run.

ln.Record.search("p53").to_dataframe()

You can also create relationships of entities and edit them like Excel sheets in a GUI via LaminHub.
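Conceptually, a record type such as `Sample` acts as a controlled vocabulary: a feature whose dtype is that type only accepts names of records registered under it. A minimal plain-Python sketch of this idea, assuming a hypothetical in-memory `RecordRegistry` class that is not LaminDB's implementation:

```python
from dataclasses import dataclass, field


@dataclass
class RecordRegistry:
    """Toy in-memory registry mapping a type name to the record names of that type."""

    records_by_type: dict[str, set[str]] = field(default_factory=dict)

    def add(self, name: str, type_name: str) -> None:
        self.records_by_type.setdefault(type_name, set()).add(name)

    def validate(self, value: str, type_name: str) -> None:
        """Raise ValueError unless `value` is a registered record of `type_name`."""
        if value not in self.records_by_type.get(type_name, set()):
            raise ValueError(f"{value!r} is not a registered {type_name}")

    def search(self, query: str) -> list[str]:
        """Case-insensitive substring search across all record names."""
        names = set().union(*self.records_by_type.values())
        return sorted(n for n in names if query.lower() in n.lower())


registry = RecordRegistry()
registry.add("P53mutant1", "Sample")
registry.add("P53mutant2", "Sample")

registry.validate("P53mutant1", "Sample")  # passes silently
print(registry.search("p53"))  # ['P53mutant1', 'P53mutant2']

try:
    registry.validate("P53mutant3", "Sample")
except ValueError as error:
    print(error)  # 'P53mutant3' is not a registered Sample
```

Rejecting unregistered names at annotation time is what keeps typos and ad hoc spellings out of the metadata.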

If you change source code or datasets, LaminDB manages versioning for you.
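One way to picture key-based versioning: each key points to a chain of versions, and saving new content under an existing key appends to that chain. The sketch below is a plain-Python mental model only. The `VersionedStore` class and the use of a SHA-256 content hash to detect changes are assumptions for illustration, not a description of LaminDB's internals:

```python
import hashlib
from collections import defaultdict


class VersionedStore:
    """Toy store keeping every saved version of the content under a key."""

    def __init__(self) -> None:
        self._versions: dict[str, list[tuple[str, bytes]]] = defaultdict(list)

    def save(self, key: str, content: bytes) -> int:
        """Save content under key and return its 1-based version number."""
        digest = hashlib.sha256(content).hexdigest()
        versions = self._versions[key]
        if versions and versions[-1][0] == digest:
            return len(versions)  # unchanged content: no new version
        versions.append((digest, content))
        return len(versions)

    def get(self, key: str) -> bytes:
        """Return the latest version of the content stored under key."""
        return self._versions[key][-1][1]

    def versions(self, key: str) -> list[bytes]:
        """Return all versions stored under key, oldest first."""
        return [content for _, content in self._versions[key]]


store = VersionedStore()
store.save("sample.fasta", b">seq1\nACGT\n")
store.save("sample.fasta", b">seq1\nTGCA\n")  # changed content: version 2
store.save("sample.fasta", b">seq1\nTGCA\n")  # identical content: still version 2

print(store.get("sample.fasta"))            # b'>seq1\nTGCA\n'
print(len(store.versions("sample.fasta")))  # 2
```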

Assume you run a new version of our create-fasta.py script to create a new version of sample.fasta.

import lamindb as ln

ln.track()

open("sample.fasta", "w").write(">seq1\nTGCA\n") # a new sequence

ln.Artifact("sample.fasta", key="sample.fasta", features={"design_sample": "P53mutant1"}).save() # annotate with the new sample

ln.finish()

If you now query by key, you'll get the latest version of this artifact, linked to the latest version of the source code. Previous versions of the artifact and the source code remain easily queryable:

artifact = ln.Artifact.get(key="sample.fasta") # get artifact by key

artifact.versions.to_dataframe() # see all versions of that artifact

Here is how you ingest a DataFrame:

import pandas as pd

df = pd.DataFrame({

"sequence_str": ["ACGT", "TGCA"],

"gc_content": [0.55, 0.54],

"experiment_note": ["Looks great", "Ok"],

"experiment_date": [date(2025, 10, 24), date(2025, 10, 25)],

})

ln.Artifact.from_dataframe(df, key="my_datasets/sequences.parquet").save() # no validation

To validate & annotate the content of the dataframe, use the built-in schema valid_features:

ln.Feature(name="sequence_str", dtype=str).save() # define a remaining feature

artifact = ln.Artifact.from_dataframe(

df,

key="my_datasets/sequences.parquet",

schema="valid_features" # validate columns against features

).save()

artifact.describe()

You can filter for datasets by schema and then launch distributed queries and batch loading.
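To make the idea of validating columns against features concrete, here is a minimal plain-Python sketch. It assumes a table is a dictionary of column lists and that feature definitions are a name-to-type mapping. The `validate_columns` and `coerce` helpers are hypothetical and only mimic the kind of check described above, including coercion of date strings:

```python
from datetime import date

# Feature definitions: column name -> expected Python type (mirrors the features defined above).
FEATURES = {
    "sequence_str": str,
    "gc_content": float,
    "experiment_note": str,
    "experiment_date": date,
}


def coerce(value, dtype):
    """Coerce ISO date strings to `date`; leave all other values unchanged."""
    if dtype is date and isinstance(value, str):
        return date.fromisoformat(value)
    return value


def validate_columns(table: dict[str, list]) -> dict[str, list]:
    """Return a coerced copy of the table or raise on unknown columns or wrong types."""
    validated = {}
    for column, values in table.items():
        if column not in FEATURES:
            raise KeyError(f"column {column!r} has no matching feature")
        dtype = FEATURES[column]
        coerced = [coerce(v, dtype) for v in values]
        for v in coerced:
            if not isinstance(v, dtype):
                raise TypeError(f"{column!r}: expected {dtype.__name__}, got {v!r}")
        validated[column] = coerced
    return validated


table = {
    "sequence_str": ["ACGT", "TGCA"],
    "gc_content": [0.55, 0.54],
    "experiment_date": ["2025-10-24", date(2025, 10, 25)],
}
clean = validate_columns(table)
print(clean["experiment_date"])
# [datetime.date(2025, 10, 24), datetime.date(2025, 10, 25)]
```

Failing loudly on an unregistered column is a design choice in this sketch. A real schema may instead allow extra columns and validate only the registered ones.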

To validate an AnnData with built-in schema ensembl_gene_ids_and_valid_features_in_obs, call:

import anndata as ad

import numpy as np

adata = ad.AnnData(

X=pd.DataFrame([[1]*10]*21).values,

obs=pd.DataFrame({'cell_type_by_model': ['T cell', 'B cell', 'NK cell'] * 7}),

var=pd.DataFrame(index=[f'ENSG{i:011d}' for i in range(10)])

)

artifact = ln.Artifact.from_anndata(

adata,

key="my_datasets/scrna.h5ad",

schema="ensembl_gene_ids_and_valid_features_in_obs"

)

artifact.describe()

To validate a SpatialData object or any other array-like dataset, you need to construct a Schema. You can do this by composing the schema of a complicated object from simple pandera-style schemas: docs.lamin.ai/curate.

The bionty plug-in gives you >20 public ontologies as SQLRecord registries. It was used to validate the ENSG ids in the adata just before.

import bionty as bt

bt.CellType.import_source() # import the default ontology

bt.CellType.to_dataframe() # your extendable cell type ontology in a simple registry

Read more: docs.lamin.ai/manage-ontologies.
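Validating labels against an ontology registry amounts to checking each value against the registered names, optionally mapping synonyms to their canonical name. A minimal plain-Python sketch, assuming a tiny hand-written registry (the entries and synonyms below are illustrative, not loaded from a public ontology) and a hypothetical `standardize` helper:

```python
# Canonical name -> known synonyms (illustrative entries only).
CELL_TYPES = {
    "T cell": {"T lymphocyte", "T-cell"},
    "B cell": {"B lymphocyte", "B-cell"},
    "natural killer cell": {"NK cell"},
}

# Reverse index from every accepted spelling (lower-cased) to its canonical name.
INDEX = {name.lower(): name for name in CELL_TYPES}
for name, synonyms in CELL_TYPES.items():
    for synonym in synonyms:
        INDEX[synonym.lower()] = name


def standardize(labels: list[str]) -> tuple[list[str], list[str]]:
    """Map labels to canonical names; return (standardized, unrecognized)."""
    standardized, unrecognized = [], []
    for label in labels:
        canonical = INDEX.get(label.lower())
        if canonical is None:
            unrecognized.append(label)
        else:
            standardized.append(canonical)
    return standardized, unrecognized


ok, unknown = standardize(["T cell", "NK cell", "b-cell", "my new cell"])
print(ok)       # ['T cell', 'natural killer cell', 'B cell']
print(unknown)  # ['my new cell']
```

Unrecognized labels are returned rather than dropped, so they can be reviewed and, if legitimate, added to the registry.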

LaminDB integrates well with computational workflow/pipeline managers, e.g. with Nextflow or redun: docs.lamin.ai/pipelines

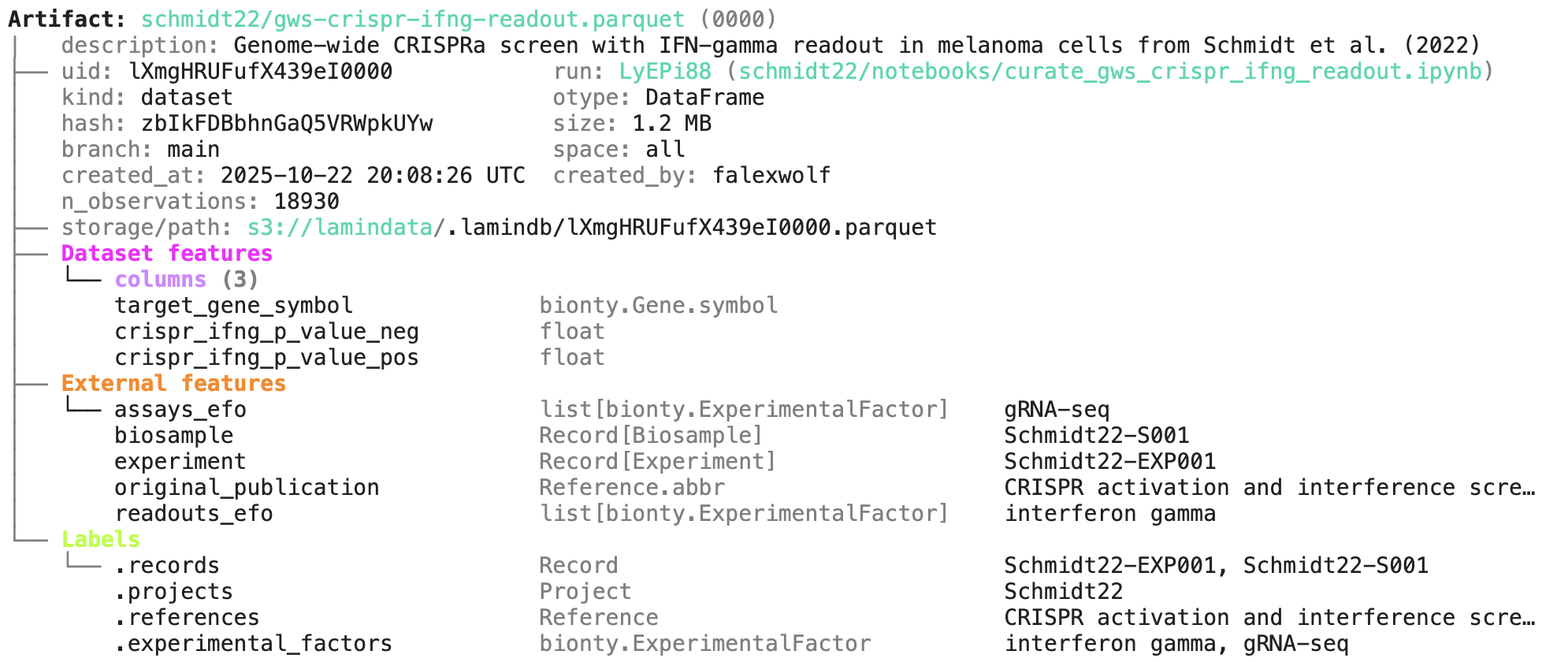

In some cases, LaminDB can offer a simpler alternative. In github.com/laminlabs/schmidt22 we manage several workflows, scripts, and notebooks to reconstruct the project of Schmidt et al. (2022). A phenotypic CRISPRa screening result is integrated with scRNA-seq data. Here is one of the input artifacts:

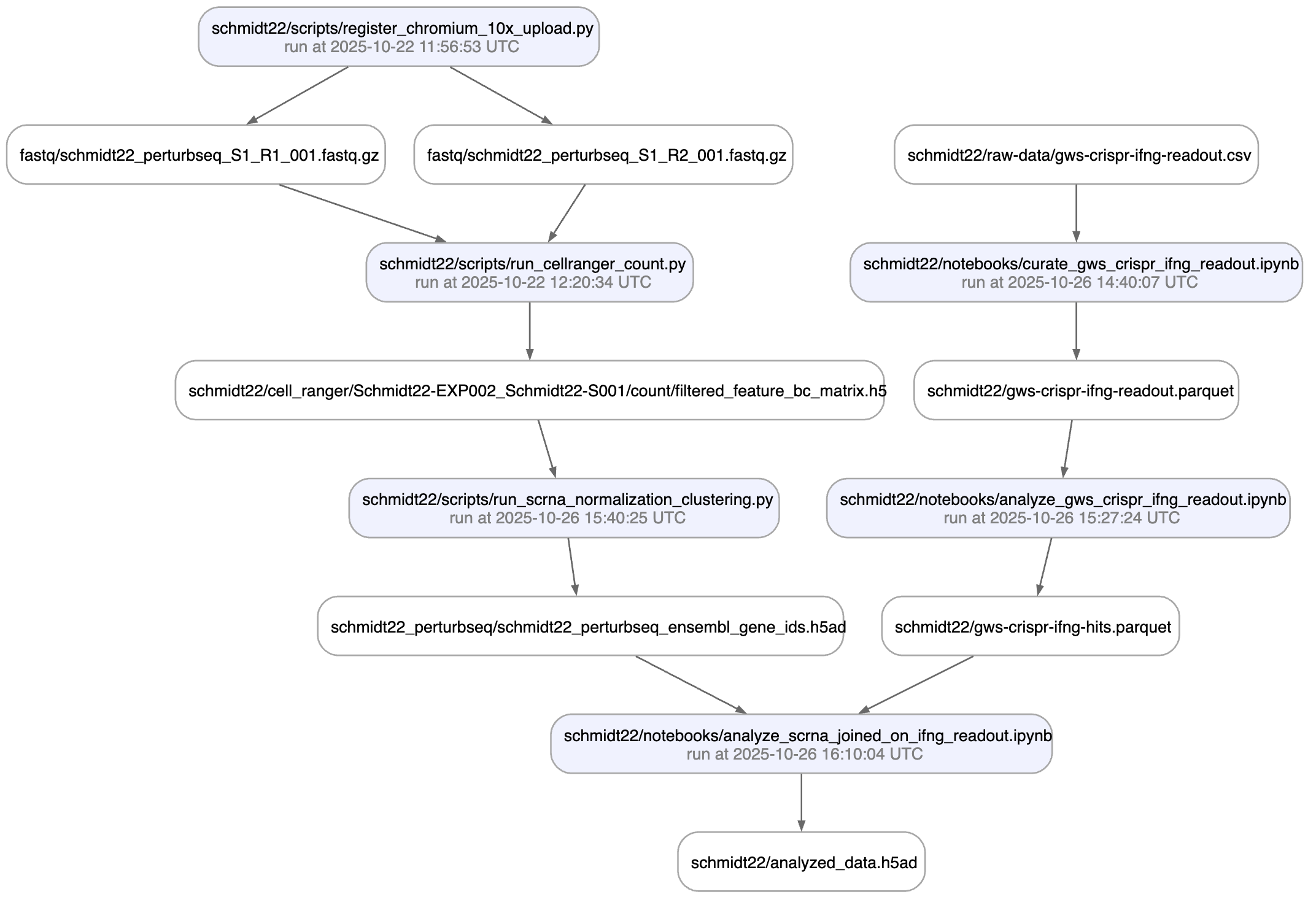

And here is the lineage of the final result:

You can explore it here.
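Data lineage of this kind can be modeled as a directed graph in which runs connect input artifacts to output artifacts. A minimal plain-Python sketch of walking such a graph upstream, with made-up artifact and script names and a hypothetical `upstream` helper (not LaminDB's API):

```python
# Each run records its transform (source code), its inputs, and its outputs.
# All names are made up for illustration.
RUNS = [
    {"transform": "preprocess.py", "inputs": ["raw_counts.h5ad"], "outputs": ["filtered.h5ad"]},
    {"transform": "screen.ipynb", "inputs": ["crispra_screen.csv"], "outputs": ["hits.parquet"]},
    {"transform": "integrate.py", "inputs": ["filtered.h5ad", "hits.parquet"], "outputs": ["result.h5ad"]},
]

# Index: output artifact -> the run that produced it.
PRODUCED_BY = {output: run for run in RUNS for output in run["outputs"]}


def upstream(artifact: str) -> list[str]:
    """Return all transforms and artifacts an artifact depends on, depth-first."""
    run = PRODUCED_BY.get(artifact)
    if run is None:  # a source artifact that no tracked run produced
        return []
    lineage = [run["transform"]]
    for parent in run["inputs"]:
        lineage.append(parent)
        lineage.extend(upstream(parent))
    return lineage


print(upstream("result.h5ad"))
# ['integrate.py', 'filtered.h5ad', 'preprocess.py', 'raw_counts.h5ad',
#  'hits.parquet', 'screen.ipynb', 'crispra_screen.csv']
```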